.NET developers usually know they should measure code performance using a Stopwatch class from the System.Diagnostics namespace. From time to time though I see code where someone uses DateTime instances for this purpose. And it’s not very surprising as DateTime class is usually the one that comes to mind when you think of time in .NET. In today’s post I would like to show you the difference in accuracy of both those approaches and the price you need to pay using either of them. We will work on this sample code that does nothing but measure time :):

#define TRACE

using System;

using System.Diagnostics;

using System.Diagnostics.Eventing;

using System.IO;

using System.Linq;

using System.Windows.Forms;

using System.Threading;

public class Class1 {

public static void Main(String[] args) {

bool fout = false;

if (args.Length > 0 && String.Equals(args[0], "-tofiles", StringComparison.OrdinalIgnoreCase)) {

fout = true;

}

const String providerId = "2c0a81aa-1f56-4e36-a4b5-f2e3787f887e";

const int loop = 100000;

Trace.Listeners.Clear();

Trace.Listeners.Add(new EventProviderTraceListener(providerId));

Console.WriteLine("Stopwatch frequency: {0:#,#} ticks / s", Stopwatch.Frequency);

Console.WriteLine("DateTime: 10 000 000 ticks / s");

Console.WriteLine("Loop count: {0:#,#}", loop);

Console.WriteLine("---------------------------------------------------");

int i,j,d;

i = j = d = 0;

var deltas = new int[loop];

var times = new long[loop];

var ldt = DateTime.UtcNow.Ticks;

var startdt = ldt;

while (i < loop) {

var cdt = DateTime.UtcNow.Ticks;

if (ldt != cdt && i > 2) {

int delta = (int)(cdt - ldt);

deltas[i] = delta;

if (delta > 100) {

Trace.TraceInformation("Delta: {0}", delta);

}

ldt = cdt;

d = j;

} else {

j++;

}

times[i] = cdt;

i++;

}

ldt = DateTime.UtcNow.Ticks;

Console.WriteLine("DateTime start ticks: {0}", startdt);

Console.WriteLine("DateTime ticks: {0:#,#} | ms: {1} | missed loop iterations: {2:#,#}", ldt - startdt, (ldt - startdt) / 10000.0, j);

var q = deltas.Where(e => e > 0);

if (q.Any()) {

Console.WriteLine("Timer deltas | min: {0}, max: {1}, avg: {2}", q.Min(), q.Max(), q.Average());

}

if (fout) {

DumpToCsvFile("datetimes.csv", times);

DumpToCsvFile("datetimes-deltas.csv", deltas);

}

Console.WriteLine("---------------------------------------------------");

i = j = d = 0;

Array.Clear(deltas, 0, deltas.Length);

Array.Clear(times, 0, times.Length);

var sw = new Stopwatch();

sw.Start();

var ls = sw.ElapsedTicks;

var starts = ls;

while (i < loop) {

var cs = sw.ElapsedTicks;

if (ls != cs && i > 2) {

int delta = (int)(cs - ls);

deltas[i] = delta;

if (delta > 100) {

Trace.TraceInformation("Delta: {0}", delta);

}

ls = cs;

d = j;

} else {

j++;

}

times[i] = cs;

i++;

}

ls = sw.ElapsedTicks;

Console.WriteLine("Stopwatch start ticks: {0}", starts);

Console.WriteLine("Stopwatch ticks: {0:#,#} | ms: {1} | missed loop iterations: {2:#,#}", ls - starts, (ls - starts) * 1000.0d / Stopwatch.Frequency, j);

q = deltas.Where(e => e > 0);

if (q.Any()) {

Console.WriteLine("Timer deltas | min: {0}, max: {1}, avg: {2}", q.Min(), q.Max(), q.Average());

}

if (fout) {

DumpToCsvFile("stopwatch.csv", times);

DumpToCsvFile("stopwatch-deltas.csv", deltas);

}

}

private static void DumpToCsvFile<T>(String fname, T[] times) {

using (var sw = new StreamWriter(fname)) {

for (int i = 0; i < times.Length; i++) {

sw.WriteLine("{0,9},{1}", i, times[i]);

}

}

}

}

Don’t worry if some parts of the code seem strange and unnecessary – I will explain them later. For now let’s focus on two while loops. We simply iterate a given number of times, each time checking if our timer (DateTime.UtcNow.Ticks in the first loop, Stopwatch.ElapsedTicks in the second loop) has changed since last iteration. If it had then we store information about the time increase and if it’s higher than 100 we send a trace event. We skip first two iterations as from my observations they did not provide valid data. After each loop we print on the output the number of passed ticks, their representation in milliseconds and the number of “missed loops” – i.e. loops for which the timer elapsed value hadn’t changed. We also provide information on the minimal, maximal and average recorded time increase. Now, before we look at some statistics I gathered, think what numbers would you expect to see.

Time to measure

My desktop machine, on which I conducted the experiment, is 3-years old and the loop with 100 000 iterations was enough for it – you may need to make the number higher to observe similar results on your hardware. Here is coreinfo output for my desktop (I stripped unnecessary information):

> coreinfo

Pentium(R) Dual-Core CPU E5300 @ 2.60GHz

Intel64 Family 6 Model 23 Stepping 10, GenuineIntel

...

Maximum implemented CPUID leaves: 0000000D (Basic), 80000008 (Extended).

Logical to Physical Processor Map:

*- Physical Processor 0

-* Physical Processor 1

Logical Processor to Socket Map:

** Socket 0

Logical Processor to NUMA Node Map:

** NUMA Node 0

Logical Processor to Cache Map:

*- Data Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64

*- Instruction Cache 0, Level 1, 32 KB, Assoc 8, LineSize 64

** Unified Cache 0, Level 2, 2 MB, Assoc 8, LineSize 64

-* Data Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64

-* Instruction Cache 1, Level 1, 32 KB, Assoc 8, LineSize 64

Logical Processor to Group Map:

** Group 0

And results from three executions of our TestClock.exe appliction:

PS cpu-lab> .\TestClock.exe

Stopwatch frequency: 2 539 156 ticks / s

DateTime: 10 000 000 ticks / s

Loop count: 100 000

---------------------------------------------------

DateTime start ticks: 635322737464673264

DateTime ticks: 9 991 | ms: 0,9991 | missed loop iterations: 99 999

Loop count deltas | min: 42934, max: 42934, avg: 42934

Timer deltas | min: 9991, max: 9991, avg: 9991

---------------------------------------------------

Stopwatch start ticks: 3

Stopwatch ticks: 169 436 | ms: 66,7292596437556 | missed loop iterations: 11

Loop count deltas | min: 11, max: 11, avg: 11

Timer deltas | min: 1, max: 454, avg: 1,69452639790377

PS cpu-lab> .\TestClock.exe

Stopwatch frequency: 2 539 156 ticks / s

DateTime: 10 000 000 ticks / s

Loop count: 100 000

---------------------------------------------------

DateTime start ticks: 635322737474030645

DateTime ticks: 19 995 | ms: 1,9995 | missed loop iterations: 99 998

Loop count deltas | min: 14710, max: 62555, avg: 38632,5

Timer deltas | min: 9987, max: 10008, avg: 9997,5

---------------------------------------------------

Stopwatch start ticks: 2

Stopwatch ticks: 168 041 | ms: 66,1798644904055 | missed loop iterations: 11

Loop count deltas | min: 11, max: 11, avg: 11

Timer deltas | min: 1, max: 434, avg: 1,68057486323496

PS cpu-lab> .\TestClock.exe

Stopwatch frequency: 2 539 156 ticks / s

DateTime: 10 000 000 ticks / s

Loop count: 100 000

---------------------------------------------------

DateTime start ticks: 635322737482013380

DateTime ticks: 19 995 | ms: 1,9995 | missed loop iterations: 99 998

Loop count deltas | min: 15856, max: 67295, avg: 41575,5

Timer deltas | min: 9991, max: 10004, avg: 9997,5

---------------------------------------------------

Stopwatch start ticks: 3

Stopwatch ticks: 169 051 | ms: 66,577634458064 | missed loop iterations: 11

Loop count deltas | min: 11, max: 11, avg: 11

Timer deltas | min: 1, max: 453, avg: 1,69067597435718

First, notice how many loop iterations were required to observe a change in DateTime.UtcNow.Ticks. In 100 000 iterations there were 1-2 changes. The time delta for the system timer depends on the current clock resultion which on my machine at the time of the test was around 1ms. DateTime tick is always 0,0001 ms and you can see that timer deltas for DateTime were around 10 000 ticks. You may check the current clock resolution using clockres (another great tool from sysintenals):

> clockres

ClockRes v2.0 - View the system clock resolution

Copyright (C) 2009 Mark Russinovich

SysInternals - www.sysinternals.com

Maximum timer interval: 15.625 ms

Minimum timer interval: 0.500 ms

Current timer interval: 1.000 ms

Now imagine that for some reason (usually energy consumption) your current clock resolution was set to 15ms. Measuring application code with 15ms accuracy may lead to really bad conclusions on its performance. And that’s the reason why Stopwatch class exists. Stopwatch uses a special High-Frequency Timer which interval on my machine is around 2 Stopwatch ticks (2 * 1 / 2539156 * 1000 * 1000 ~ 0,78 us – 2 539 156 is a Stopwatch frequency on my desktop). For DateTime we had stable ~10 000 ticks deltas, but for Stopwatch 95% deltas were 1-2 ticks with the other 5% reaching values above 100 ticks. In the next paragraph we will investigate further these discrepancies. Another thing from the above output that should attract your attention is the code execution time. Stopwatch loop is almost 30x slower than DateTime one. That shows that frequent querying for elapsed time using Stopwatch is not cost free. Of course we are talking about milliseconds here but keep that in mind.

Going deeper

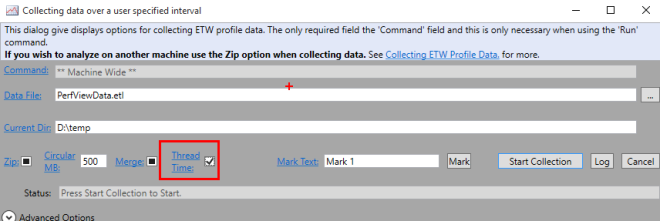

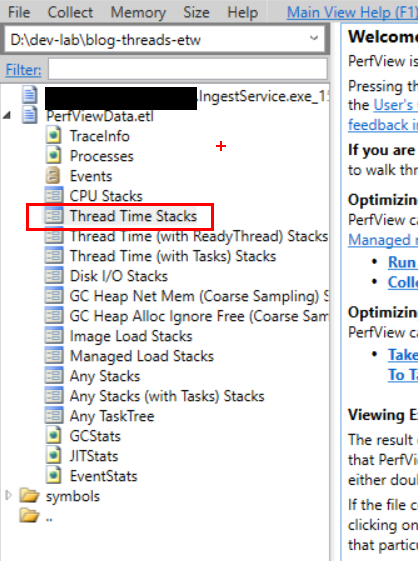

In order to investigate Stopwatch timer delays I prepared a following batch script (based on Andrew Richards CPU wait script). If you don’t have Windows Performance Toolkit installed (which is required to run the script) you may get it from ADK:

@echo off

echo Press a key when ready to start...

pause

echo .

echo ...Capturing...

echo .

xperf -on PROC_THREAD+LOADER+PROFILE+INTERRUPT+DPC+DISPATCHER+CSWITCH -stackwalk Profile+CSwitch+ReadyThread -BufferSize 1024 -MinBuffers 256 -MaxBuffers 256 -MaxFile 256 -FileMode Circular -f kernel.etl

xperf -start clr -on e13c0d23-ccbc-4e12-931b-d9cc2eee27e4:0x1CCBD:0x5+A669021C-C450-4609-A035-5AF59AF4DF18:0xB8:0x5 -f clr.etl

xperf -start myetl -on 2c0a81aa-1f56-4e36-a4b5-f2e3787f887e:0x1F00:0x1F -f my.etl

echo Press a key when you want to stop...

pause

echo .

echo ...Stopping...

echo .

xperf -stop

xperf -stop clr

xperf -stop myetl

xperf -merge kernel.etl clr.etl my.etl cpuwait.etl

del kernel.etl

del clr.etl

del my.etl

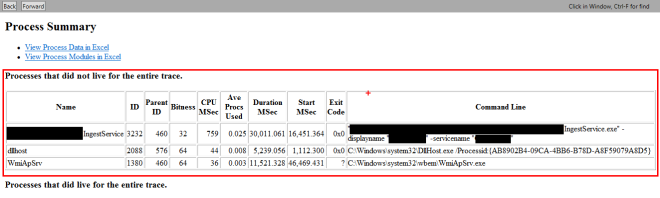

We will collect events from Windows kernel provider that describe process/threads states (PROC_THREAD+LOADER+PROFILE+INTERRUPT+DPC+DISPATCHER+CSWITCH), .NET events from .NET Runtime and .NET Rundown providers (those are needed to decode managed stacks) and our custom events from the 2c0a81aa-1f56-4e36-a4b5-f2e3787f887e provider, defined at the beginning of the Main method. As noted previously, anytime we encounter delta bigger than 100 ticks we send an ETW event using this provider. This will help us to find kernel events that happen at the same time. With them we should be able to inspect the source of the timer delays.

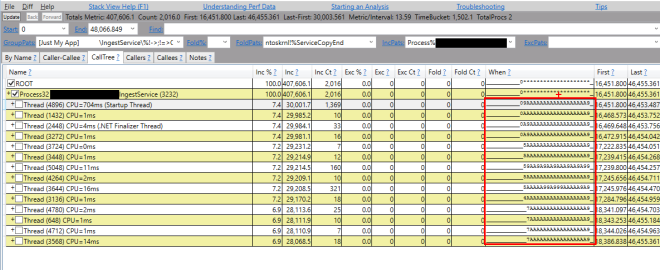

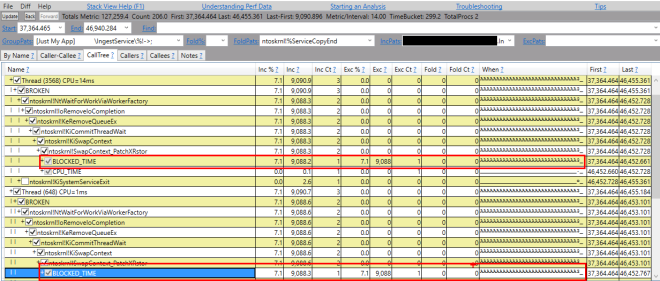

After trace collection I opened the cpuwait.etl file in Windows Perofmance Analyzer and grabbed the Generic events and CPU Precise graphs:

![events]()

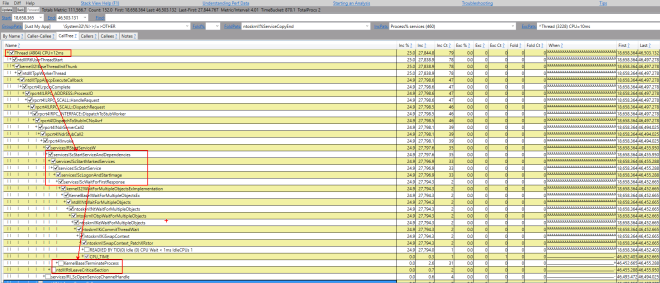

There are two seperate groups of events from our application provider. We may skip the first group as those were generated by DateTime loop and focus on one of the events from the second group (I rearranged columns in the summary table and sorted rows chronologically by SwitchInTime value):

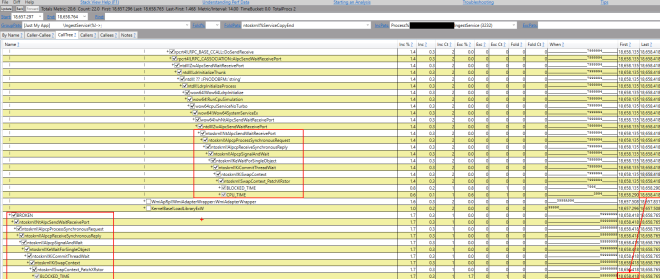

![context-switch]()

The image clearly shows that the delay in stopwatch timer was caused by a context switch in TestClock.exe between two processors. Notice also that the waiting time for the thread to start again equals 113 us which is more than 100 Stopwatch ticks and explains why the event was generated. This also proves that Stopwatch interval frequency is enough even for meticulous code profiling.

If you’d like to perform tests by yourself the WPA profile I used and the source code of the TestClock.exe is available for download on my blog’s codeplex page.

Filed under:

CodeProject,

Profiling .NET applications ![]()

![]()